OHA Chatbot

The application Honushield allows a user to log in and update their COVID status for the day. Users are prompted with questions about how they are feeling, whether they are experiencing any symptoms (such as fever or cough), and whether they have recently been exposed to anyone with COVID. Another important feature of the site is the ability to upload a vaccination card. Many events ask for verification of vaccination status, and this application fills that need. This is especially useful because the University of Hawaii campus requires students and faculty to be vaccinated or show proof of a negative COVID test.

Expected user experience does not guarantee actual user experience. Lesson learned. But this is also why we test before deployment.

For the Hawaiian Annual Code Challenge, my team and I built a chatbot for the Office of Hawaiian Affairs (OHA). Their problem was that they received a large number of phone calls and had a difficult time handling them with their small team, so they wanted a chatbot. We used Google’s Dialogflow to develop the chatbot itself, routed it through Kommunicate, a hybrid help desk platform, and deployed it to our site.

Here is what I worked on personally. I did the initial system design and researched the pros and cons of the programs we could use. After that, I dug into the documentation to build a solid foundation of best practices for each component, and conveyed that information in condensed form to my teammates. In the end, I connected all the components, deployed our solution, and maintained it throughout development. Being team captain also came with the responsibility of additional “paperwork” related to registration, planning, and generally keeping the team on track.

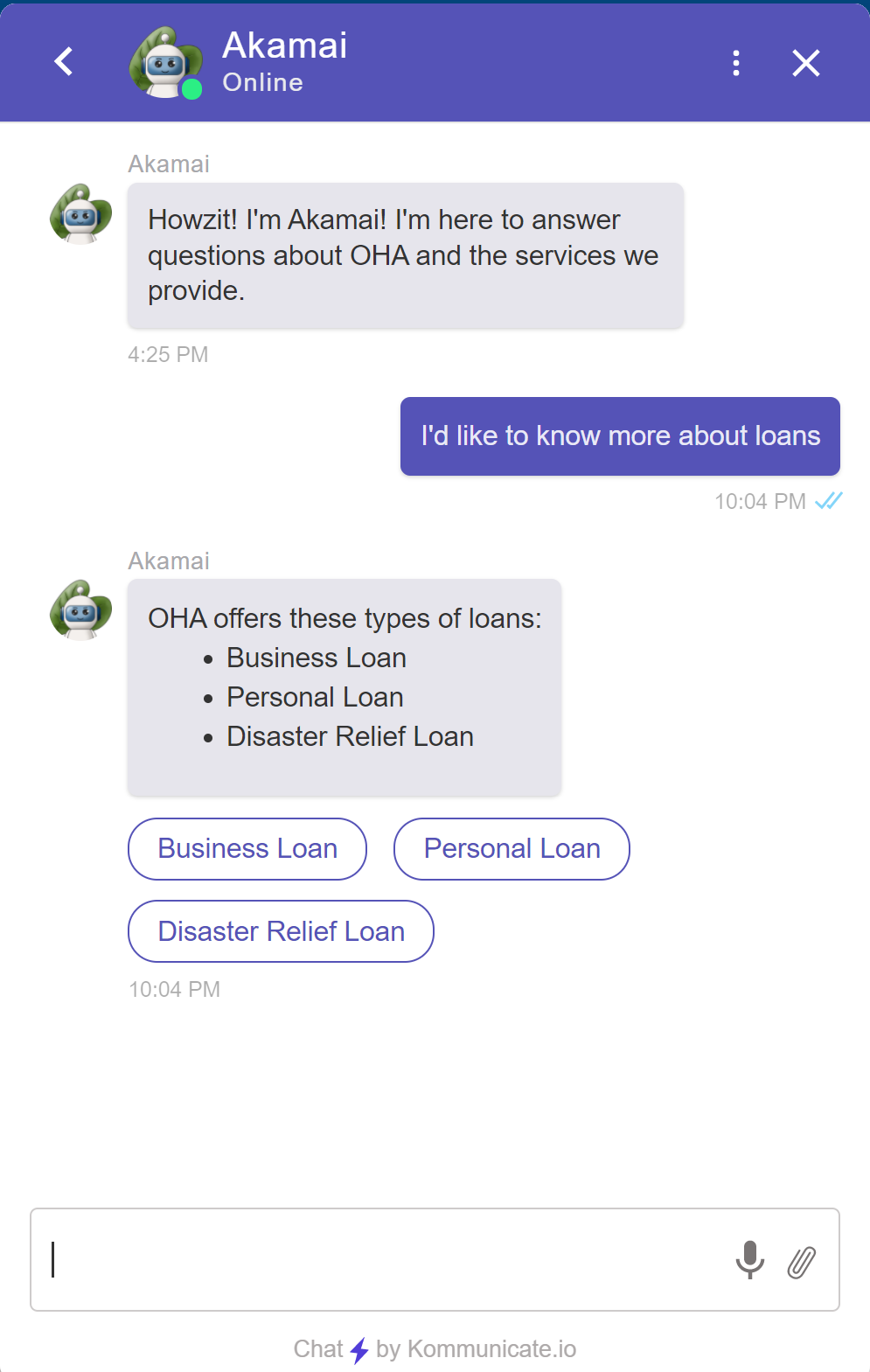

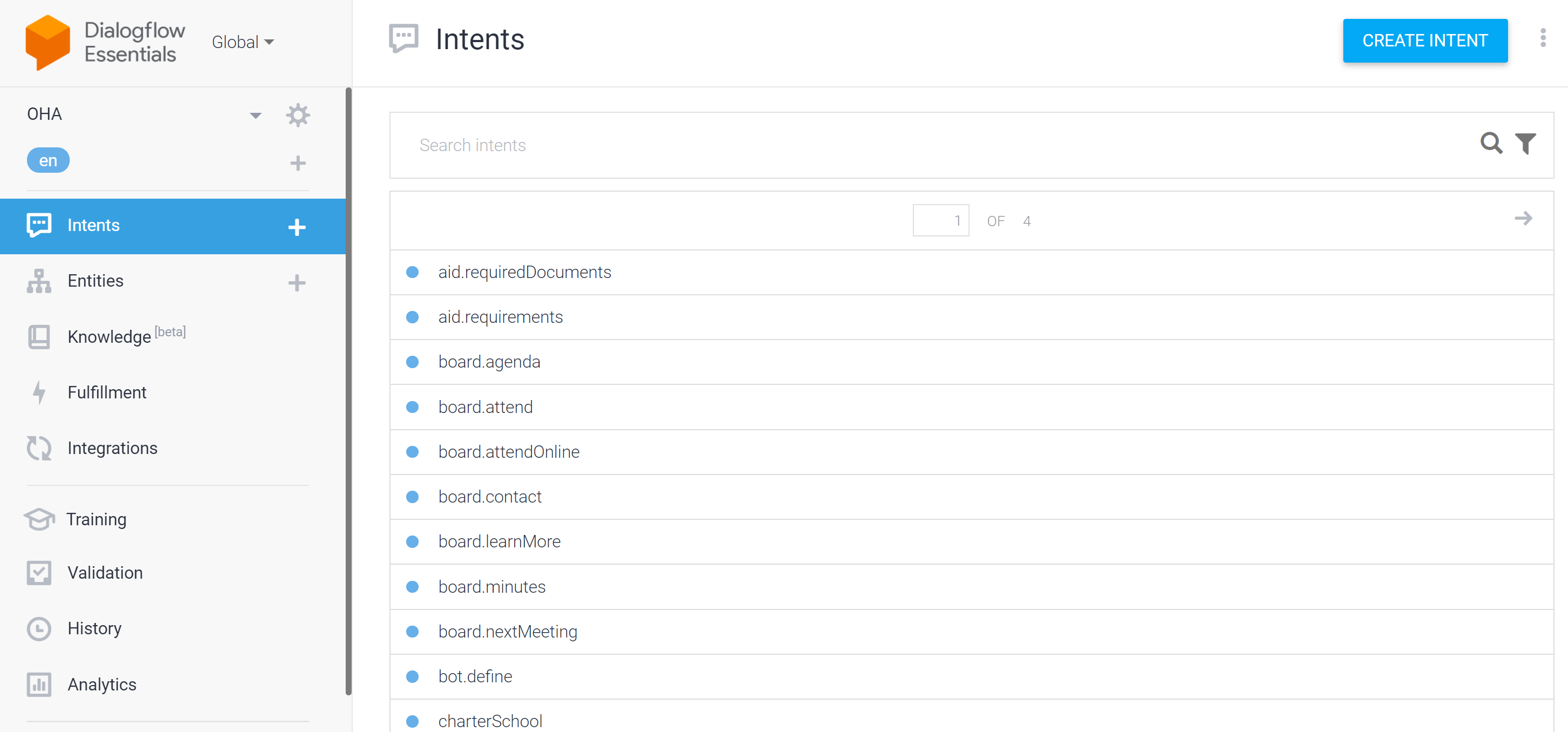

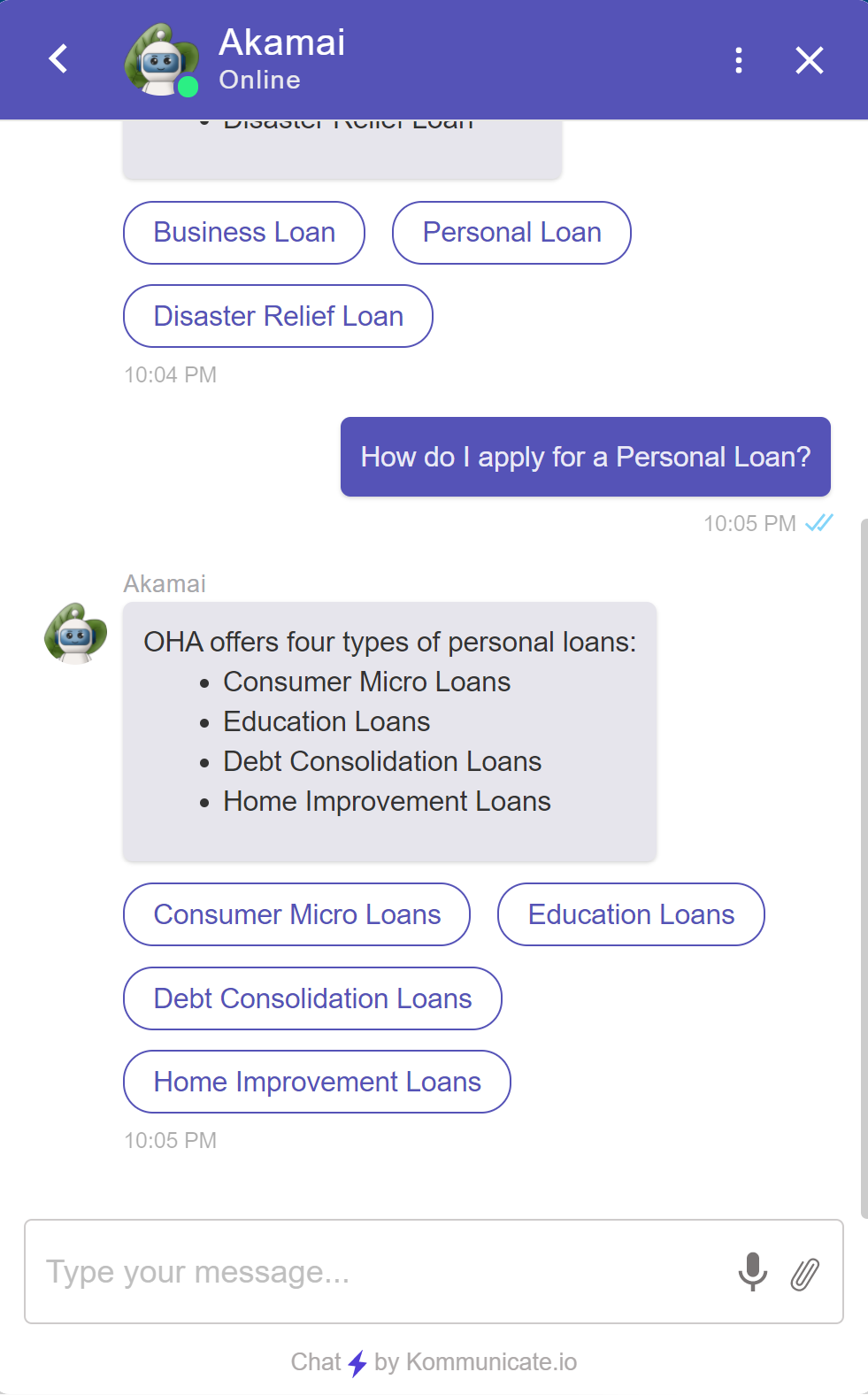

When users visit our site, they are greeted by our chatbot, Akamai. I developed the “intents” for it. An intent represents an end user’s intention for one conversation turn. For example, if a user asks “How are you?”, you program the chatbot to reply “I am well, thank you.” That exchange is one intent. A teammate and I split up the work, creating intents for the frequently asked questions that OHA receives.
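To make the idea of an intent concrete, here is a toy sketch in Python. Dialogflow matches intents with its own machine-learning model, so the word-overlap score below is only a stand-in for illustration; the intent names, training phrases, fallback text, and `threshold` value are all hypothetical.

```python
import re
from typing import Optional, Set, Tuple

# Hypothetical intents for illustration: each maps a few training phrases to one reply.
INTENTS = {
    "small_talk.how_are_you": {
        "training_phrases": ["how are you", "how are you doing", "how's it going"],
        "reply": "I am well, thank you.",
    },
    "scholarships": {
        "training_phrases": [
            "what kind of scholarships does OHA provide",
            "scholarship information",
        ],
        "reply": "OHA offers several scholarships. What would you like to know?",
    },
}

FALLBACK_REPLY = "Sorry, I did not understand. Could you rephrase?"


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def match_intent(user_text: str, threshold: float = 0.5) -> Tuple[Optional[str], str]:
    """Return (intent name, reply) for the best word-overlap match, or a fallback."""
    words = tokenize(user_text)
    best_name: Optional[str] = None
    best_score = 0.0
    for name, intent in INTENTS.items():
        for phrase in intent["training_phrases"]:
            phrase_words = tokenize(phrase)
            # Fraction of the training phrase's words that appear in the user's text.
            score = len(words & phrase_words) / len(phrase_words)
            if score > best_score:
                best_name, best_score = name, score
    if best_name is None or best_score < threshold:
        return None, FALLBACK_REPLY
    return best_name, INTENTS[best_name]["reply"]


print(match_intent("How are you?"))  # ('small_talk.how_are_you', 'I am well, thank you.')
print(match_intent("scholarships"))  # (None, 'Sorry, I did not understand. Could you rephrase?')
```

In this toy matcher, the second call shows the kind of gap that bit us: a single word like “scholarships” covers only a small fraction of a full-sentence training phrase, so the bot falls back instead of answering.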

However, we ran into a major issue: we found out the hard way that we had designed the product wrong. We expected users to ask the chatbot questions similar to the FAQs we were provided, and that is how we were told they would use it. (For context, we were given a sheet of common questions as the knowledge base the bot should cover.) It turns out no one used the chatbot that way. No one asked a single question from the FAQs. In fact, no one asked questions at all, or even typed full sentences! Users entered single words, phrases, misspelled words, and things completely unrelated to the site they were on.

We expected them to ask “What kind of scholarships does OHA provide?” Instead, they typed things like “located?”, “lanai” (balcony), or my favorite, “repeat”. Why would anyone ask the chatbot to repeat itself when they can simply look at its latest reply…?

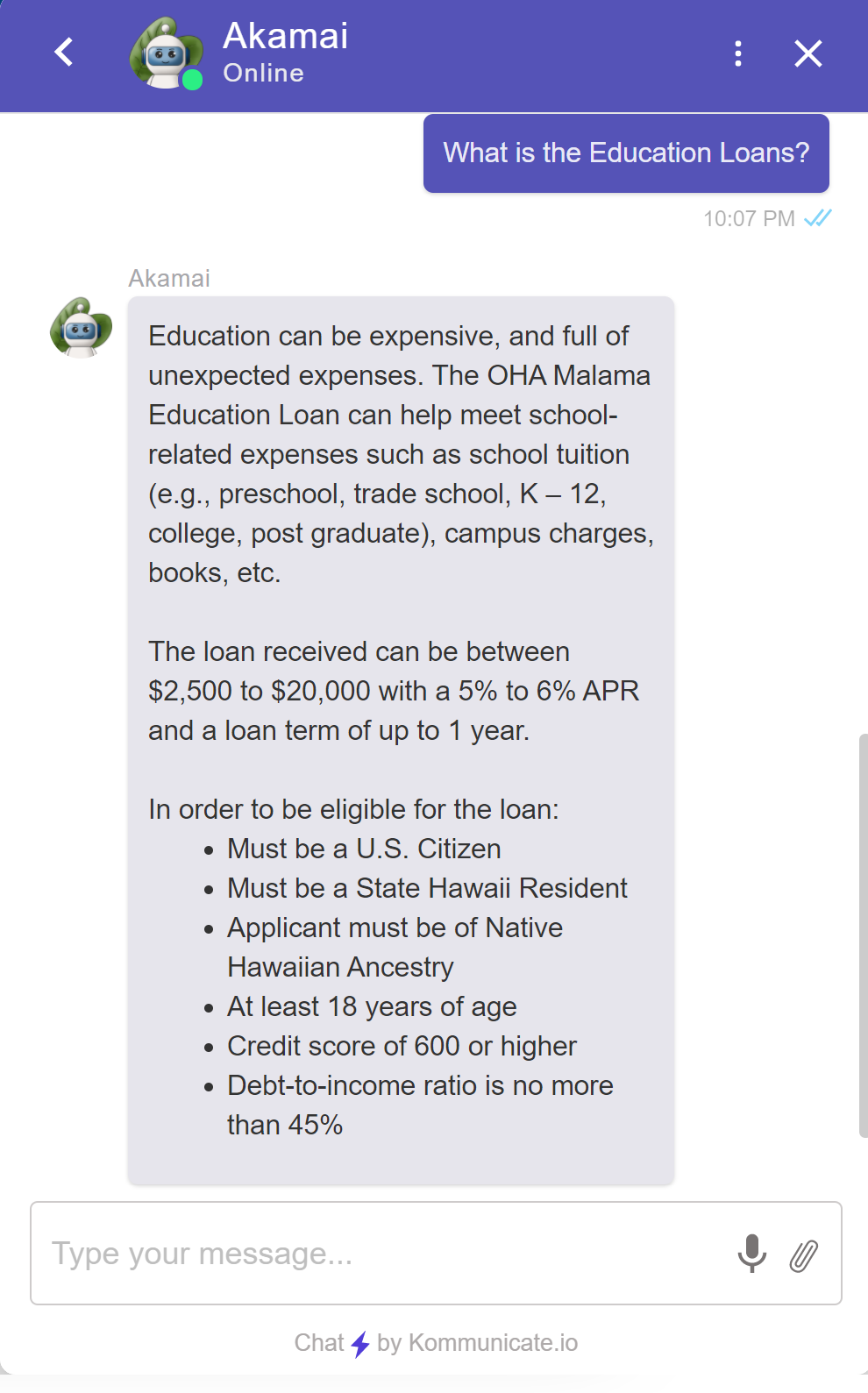

We realized we needed a more general approach. Users can ask highly generic and unexpected questions, and we needed to guide them to exactly what they need. For this, I implemented tiles in the chatbot’s replies. Tiles are pre-written replies that users can click to get the information they need. For example, a user might type just “loans”. This is great because it gives us a topic to work with. The chatbot replies with a short overview of the types of loans OHA provides, such as “personal”, “business”, or “disaster relief”. If a user is looking for a business loan, they can click the “business loan” tile, and the chatbot replies with the several types available and a bit about each one, guiding the user further toward their exact needs.
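The tile flow is essentially a small topic tree: a keyword lands the user on a node, and each tile is a child they can click. Below is a minimal Python sketch of that navigation logic, assuming clicking a tile simply sends its label back as the user’s next message. The `Reply` class, the `TOPICS` tree, the `respond` function, and all reply texts are hypothetical placeholders; this is not how Dialogflow or Kommunicate represent tiles internally.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Reply:
    text: str
    tiles: List[str] = field(default_factory=list)  # labels shown as clickable tiles


# Hypothetical topic tree; replies are placeholders, not OHA's actual content.
TOPICS: Dict[str, Reply] = {
    "loans": Reply(
        "OHA offers several types of loans. Which kind are you looking for?",
        ["personal loan", "business loan", "disaster relief loan"],
    ),
    "personal loan": Reply("Placeholder: a short overview of personal loans."),
    "business loan": Reply("Placeholder: the business loan types and a bit about each."),
    "disaster relief loan": Reply("Placeholder: a short overview of disaster relief loans."),
}

# Generic or unrecognized input gets steered back to known top-level topics.
FALLBACK = Reply("I can help with these topics. Tap one to get started.", ["loans"])


def respond(message: str) -> Reply:
    """Map a typed keyword or a clicked tile's label to the next reply."""
    key = message.strip().lower().rstrip("?!.")
    return TOPICS.get(key, FALLBACK)


first = respond("Loans?")
print(first.text)
print(first.tiles)  # ['personal loan', 'business loan', 'disaster relief loan']
# Clicking a tile sends its label as the user's next message.
print(respond(first.tiles[1]).text)
```

The design choice here is that even unrecognized input gets tiles back, so a user who types something unexpected is still handed a next step instead of a dead end.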

A user study before starting the project would have caught this and saved us time. Still, I am glad we encountered this issue because I learned from it. To further remedy it, we retrained the chatbot not just on OHA’s frequently asked questions but on their entire website. Another team member and I went through all of their web pages, pulling relevant information and creating intents for it. It was a tedious process, but it vastly increased the number of statements the chatbot could respond to.
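As a hypothetical sketch of what an intent built from one web page section might contain, the Python below produces a dict modeled on the `Intent` resource of the Dialogflow ES v2 REST API (`displayName`, `trainingPhrases`, `messages`). The helper names, phrase templates, and sample text are assumptions for illustration; check the field names against the official API reference before sending anything. The point it shows is mixing question-style phrasing with short phrases and bare keywords, since that is what users actually typed.

```python
import json
import re
from typing import Dict, List


def make_training_phrases(heading: str, keywords: List[str]) -> List[str]:
    """Hypothetical helper: mix question-style, phrase, and single-word variants."""
    topic = heading.lower()
    phrases = [f"tell me about {topic}", f"{topic} info", topic]
    # Bare keywords matter because real users often typed a single word.
    phrases.extend(k.lower() for k in keywords)
    return list(dict.fromkeys(phrases))  # de-duplicate, keeping first-seen order


def section_to_intent(heading: str, body: str, keywords: List[str]) -> Dict:
    """Build a dict shaped like a Dialogflow ES v2 Intent resource."""
    display_name = re.sub(r"[^a-z0-9]+", "_", heading.lower()).strip("_")
    return {
        "displayName": display_name,
        "trainingPhrases": [
            {"type": "EXAMPLE", "parts": [{"text": phrase}]}
            for phrase in make_training_phrases(heading, keywords)
        ],
        "messages": [{"text": {"text": [body]}}],
    }


intent = section_to_intent(
    heading="Business Loans",
    body="Placeholder: a summary taken from the relevant OHA web page.",
    keywords=["loans", "business", "Business Loans"],
)
print(json.dumps(intent, indent=2))
```

Here the duplicate keyword “Business Loans” collapses into the existing “business loans” phrase, leaving five distinct training phrases for the intent.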

In conclusion, expected user experience does not guarantee actual user experience, and you should expect that.

Here are some screenshots of the chatbot in action. A user is requesting more information on loans.

The chatbot provides some general information and prompts the user for more details to determine their exact needs. It does this through tiles: when the user clicks one, a reply is automatically sent on their behalf.

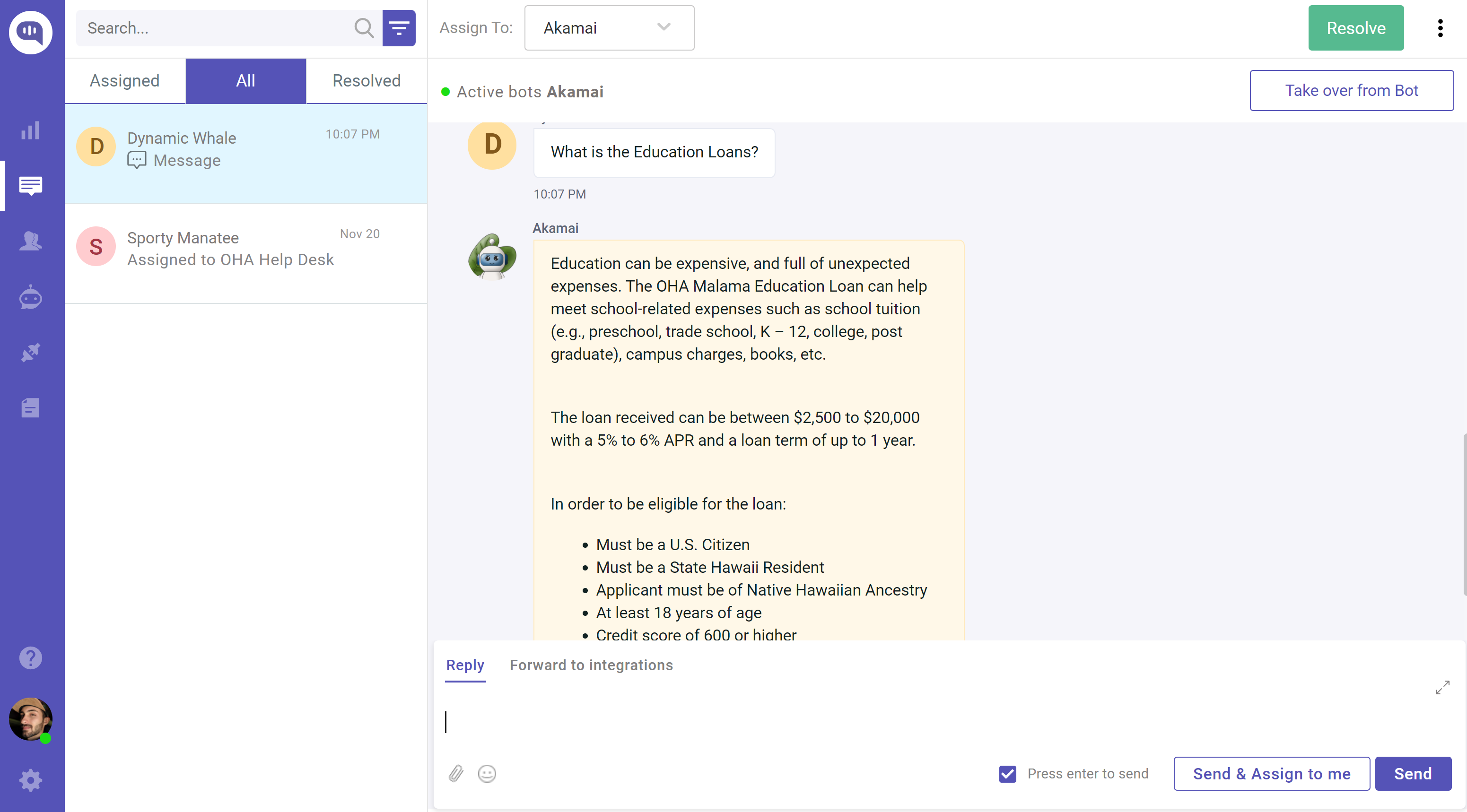

In the Kommunicate console, we can see all the connections made to the chatbot. If a user requests an agent, a real person can talk to them (if one is available)!

And here is one of the many intent pages that the team and I made.

:)